10 Strategies for Succeeding in Talk Egypt Business Magazine

As an entrepreneur or business professional looking to make a mark in Egypt’s dynamic business landscape, getting featured in Talk …

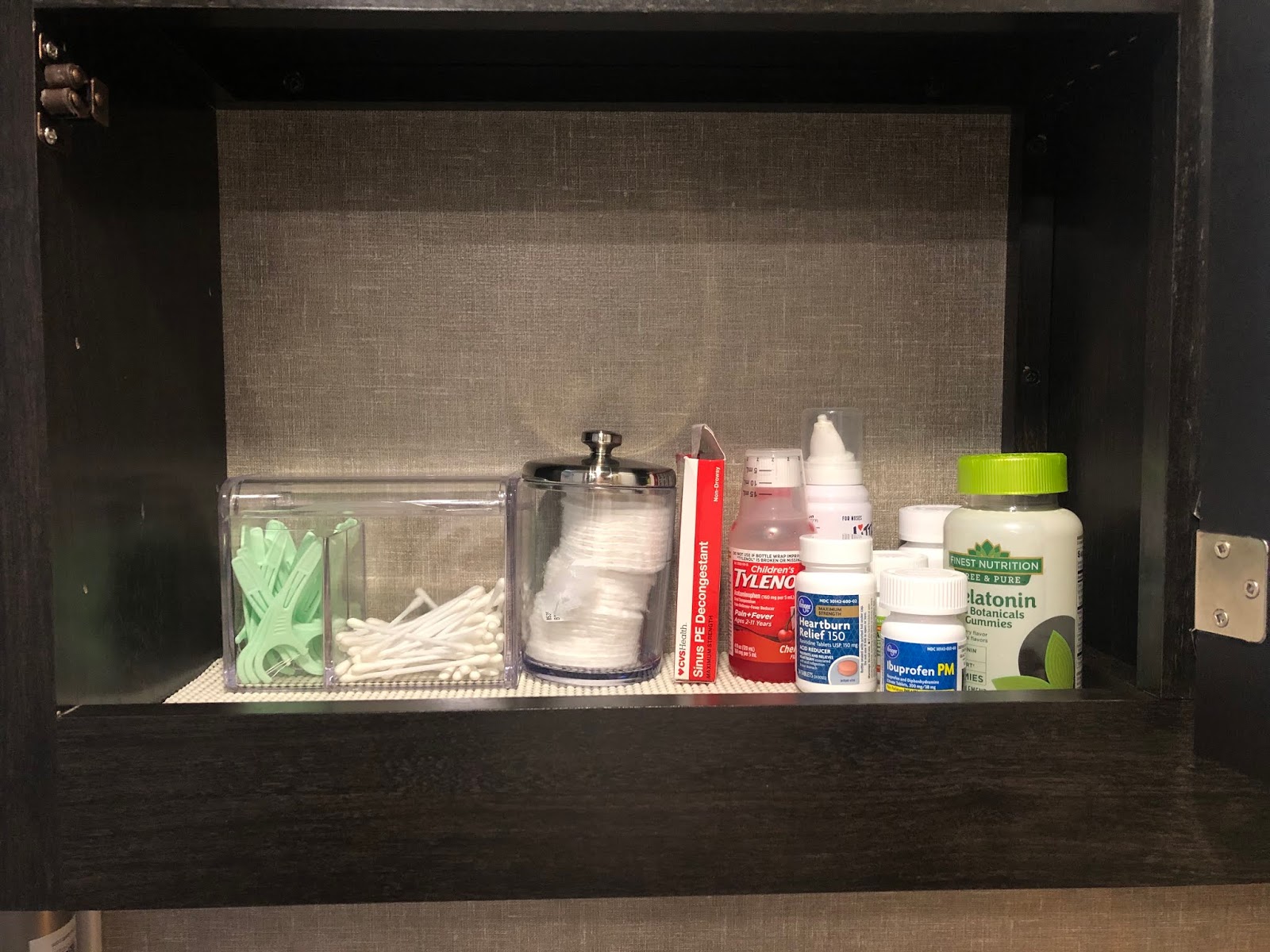

Having an RV medicine cabinet organizer is essential for a smooth and hassle-free travel experience. When you’re on the road, …

Having a medicine cabinet organizer insert is not only aesthetically pleasing but also offers several practical benefits. When your …

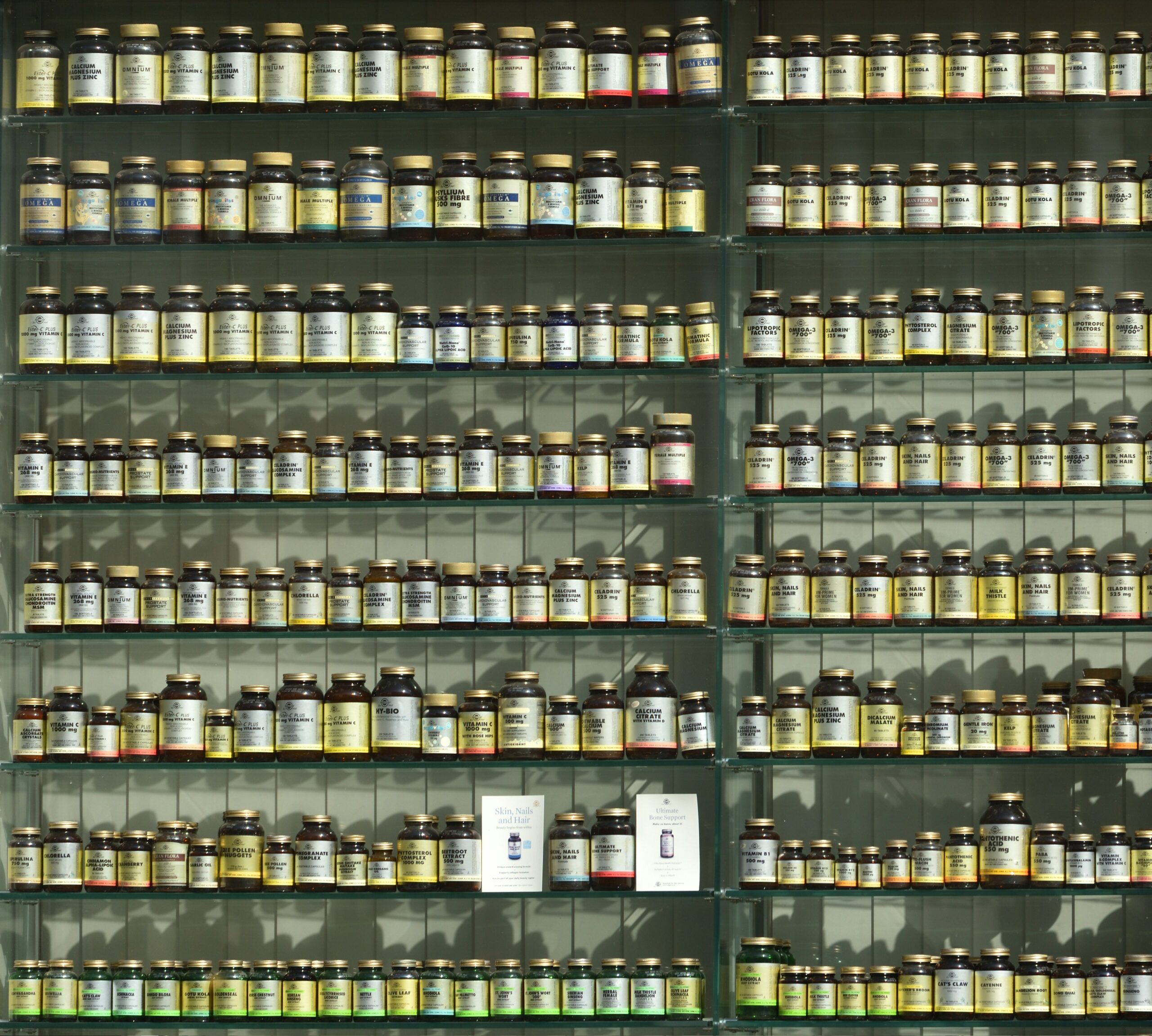

Building strong and healthy bones is essential for a vibrant and active life. Calcium and magnesium, both found in a liquid calcium magnesium supplement, are two vital …

Are you looking for the perfect trio to achieve optimal health and wellness? Look no further than vitamin D, calcium …

Are you looking for a natural way to boost your overall health and well-being? Look no further than a potassium …

Are you tired of the same old routine? Do you crave something different, something extraordinary? Look no further than Hews …

Welcome to the ultimate Friends Lifestyle Lounge, where relaxation and fun await! Nestled in a tranquil oasis, this exclusive lounge …

Welcome to “Unwind in Style: The Ultimate Lifestyle Lounge Reviews,” where we will take you on a journey through the …